Tracing OpenAI Functions with Graphsignal

By Dmitri Melikyan | 3 min read

Learn how to trace, monitor and debug OpenAI function calling in production and development.

OpenAI Function Calling

The OpenAI function calling API, together with the latest GPT-3.5 model, provides a more reliable way to generate function calls. Given a set of function specifications and a prompt with relevant context, such as domain knowledge or factual information, the model can generate a function call specification with populated parameter values.
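To make this concrete, here is a minimal sketch of what a generated function call looks like and how an application might check it before executing anything. No API request is made: `sample_call` is a hand-written stand-in for the `function_call` part of a model message, and `validate_arguments` is a hypothetical helper, not part of the OpenAI library.

```python
import json

# Function specification in the JSON Schema style used by the function calling API.
WEATHER_SPEC = {
    "name": "get_current_weather",
    "description": "Get the current weather in a given location",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string"},
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}

# Hand-written stand-in for a model response (assumption, not real output).
# Note that `arguments` arrives as a JSON string, not as a dict.
sample_call = {
    "name": "get_current_weather",
    "arguments": '{"location": "Boston, MA", "unit": "celsius"}',
}


def validate_arguments(spec, call):
    """Hypothetical helper: parse the arguments and check them against the spec.

    Checks only the function name, required keys, unknown keys and enum
    values; it is not a full JSON Schema validator.
    """
    if call["name"] != spec["name"]:
        raise ValueError(f"unexpected function: {call['name']}")
    args = json.loads(call["arguments"])  # raises ValueError on invalid JSON
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")
    params = spec["parameters"]
    for key in params.get("required", []):
        if key not in args:
            raise ValueError(f"missing required argument: {key}")
    for key, value in args.items():
        prop = params["properties"].get(key)
        if prop is None:
            raise ValueError(f"unknown argument: {key}")
        if "enum" in prop and value not in prop["enum"]:
            raise ValueError(f"invalid value for {key}: {value!r}")
    return args


args = validate_arguments(WEATHER_SPEC, sample_call)
print(args)
```

The model only proposes the call. Running the function, and deciding whether the proposed arguments are acceptable, remains the application's job.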

It is essential to have visibility into every step, from specifying a set of functions and context to executing the generated function call, to ensure the expected behavior for application users. Due to its tracing capabilities and native OpenAI API support, Graphsignal is a natural fit for tracing and monitoring OpenAI function calling end-to-end.

Tracing OpenAI Function Calling with Graphsignal

Graphsignal automatically traces and monitors OpenAI calls. It's only necessary to initialize it by providing a Graphsignal API key and a deployment name. To trace other functions, e.g. request handlers, a decorator or a context manager can be used. I will show how to do it in the following example. You can also check out the Quick Start for complete setup instructions.
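The two instrumentation styles mentioned here, a decorator and a context manager, follow a common pattern. The sketch below illustrates that pattern with a toy in-memory tracer built only on the standard library. The names `trace`, `trace_function` and `SPANS`, as well as the recorded fields, are hypothetical stand-ins and are not Graphsignal's implementation. With Graphsignal itself, the corresponding calls are `@graphsignal.trace_function` and `with graphsignal.trace(...)`.

```python
import functools
import time
from contextlib import contextmanager

# Collected spans; a real tracer would upload these instead of keeping them in memory.
SPANS = []


@contextmanager
def trace(name):
    """Context-manager style: record the duration and outcome of a code block."""
    span = {"name": name, "payloads": {}, "error": None}
    start = time.perf_counter()
    try:
        yield span
    except Exception as exc:
        span["error"] = repr(exc)
        raise
    finally:
        span["duration_s"] = time.perf_counter() - start
        SPANS.append(span)


def trace_function(func):
    """Decorator style: wrap every call to `func` in a span named after it."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        with trace(func.__name__):
            return func(*args, **kwargs)
    return wrapper


@trace_function
def handle_request(city):
    # The nested span is recorded first, because it finishes first.
    with trace("lookup") as span:
        result = f"72F in {city}"
        span["payloads"]["response"] = result
    return result


print(handle_request("Boston"))
print([s["name"] for s in SPANS])
```

The decorator suits whole request handlers, while the context manager is handy when only part of a function should be measured or when payloads need to be attached to the span.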

Here is a simple example demonstrating how to add the Graphsignal tracer to an application that uses OpenAI functions (the full example can be found here). The code is based on OpenAI's example from the Function Calling documentation. To add Graphsignal, I initialized the module, wrapped the function that the code calls based on OpenAI's response (optional), and also wrapped the request handler for convenience (optional), so that all steps appear in a single trace. I call the run_conversation function multiple times to simulate requests.

    import os
    import openai
    import json
    import graphsignal

    # Graphsignal: provide the GRAPHSIGNAL_API_KEY environment variable or set the `api_key` argument
    graphsignal.configure(deployment='openai-functions-example')

    def get_current_weather(location, unit="fahrenheit"):
        """Get the current weather in a given location"""
        weather_info = {
            "location": location,
            "temperature": "72",
            "unit": unit,
            "forecast": ["sunny", "windy"],
        }
        return json.dumps(weather_info)

    # Graphsignal: trace the request handler so all steps appear in one trace
    @graphsignal.trace_function
    def run_conversation():
        # Step 1, send model the user query and what functions it has access to
        response = openai.ChatCompletion.create(
            model="gpt-3.5-turbo-0613",
            messages=[{"role": "user", "content": "What's the weather like in Boston?"}],
            functions=[
                {
                    "name": "get_current_weather",
                    "description": "Get the current weather in a given location",
                    "parameters": {
                        "type": "object",
                        "properties": {
                            "location": {
                                "type": "string",
                                "description": "The city and state, e.g. San Francisco, CA",
                            },
                            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
                        },
                        "required": ["location"],
                    },
                }
            ],
            function_call="auto",
        )

        message = response["choices"][0]["message"]

        # Step 2, check if the model wants to call a function
        if message.get("function_call"):
            function_name = message["function_call"]["name"]
            function_args = json.loads(message["function_call"]["arguments"])

            # Step 3, call the function
            # Note: the JSON response from the model may not be valid JSON
            # Graphsignal: trace the function call and record its input and output
            with graphsignal.trace('get_current_weather') as span:
                function_response = get_current_weather(
                    location=function_args.get("location"),
                    unit=function_args.get("unit"),
                )
                span.set_payload('location', function_args.get("location"))
                span.set_payload('response', function_response)

            # Step 4, send model the info on the function call and function response
            second_response = openai.ChatCompletion.create(
                model="gpt-3.5-turbo-0613",
                messages=[
                    {"role": "user", "content": "What is the weather like in boston?"},
                    message,
                    {
                        "role": "function",
                        "name": function_name,
                        "content": function_response,
                    },
                ],
            )
            return second_response

The Graphsignal API key can be found here.
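As the comment in Step 3 of the example warns, the `arguments` string produced by the model is not guaranteed to be valid JSON, and the model may also name a function the application does not expose. One way to guard the call is a small dispatch table with explicit error handling. This is a sketch under assumptions: `AVAILABLE_FUNCTIONS` and `call_function` are hypothetical names, and returning error messages as JSON strings is an illustrative choice rather than anything prescribed by OpenAI or Graphsignal.

```python
import json


def get_current_weather(location, unit="fahrenheit"):
    """Simplified version of the stub from the example."""
    return json.dumps({"location": location, "temperature": "72", "unit": unit})


# Hypothetical registry mapping function names the model may emit to callables.
AVAILABLE_FUNCTIONS = {"get_current_weather": get_current_weather}


def call_function(function_call):
    """Run the function described by a `function_call` dict; return a JSON string."""
    func = AVAILABLE_FUNCTIONS.get(function_call.get("name"))
    if func is None:
        return json.dumps({"error": "unknown function"})
    try:
        args = json.loads(function_call.get("arguments") or "{}")
    except json.JSONDecodeError:
        return json.dumps({"error": "arguments are not valid JSON"})
    if not isinstance(args, dict):
        return json.dumps({"error": "arguments must be a JSON object"})
    # Drop null values so that Python defaults (e.g. unit="fahrenheit") apply.
    args = {k: v for k, v in args.items() if v is not None}
    try:
        return func(**args)
    except TypeError as exc:
        # Typically raised for missing or unexpected keyword arguments.
        return json.dumps({"error": str(exc)})


# A well-formed call, followed by one with truncated JSON.
print(call_function({"name": "get_current_weather", "arguments": '{"location": "Boston, MA"}'}))
print(call_function({"name": "get_current_weather", "arguments": '{"location": '}))
```

Wrapping `call_function` in `graphsignal.trace(...)`, as the example does for `get_current_weather`, would keep such failures visible in the same trace as the surrounding OpenAI calls.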

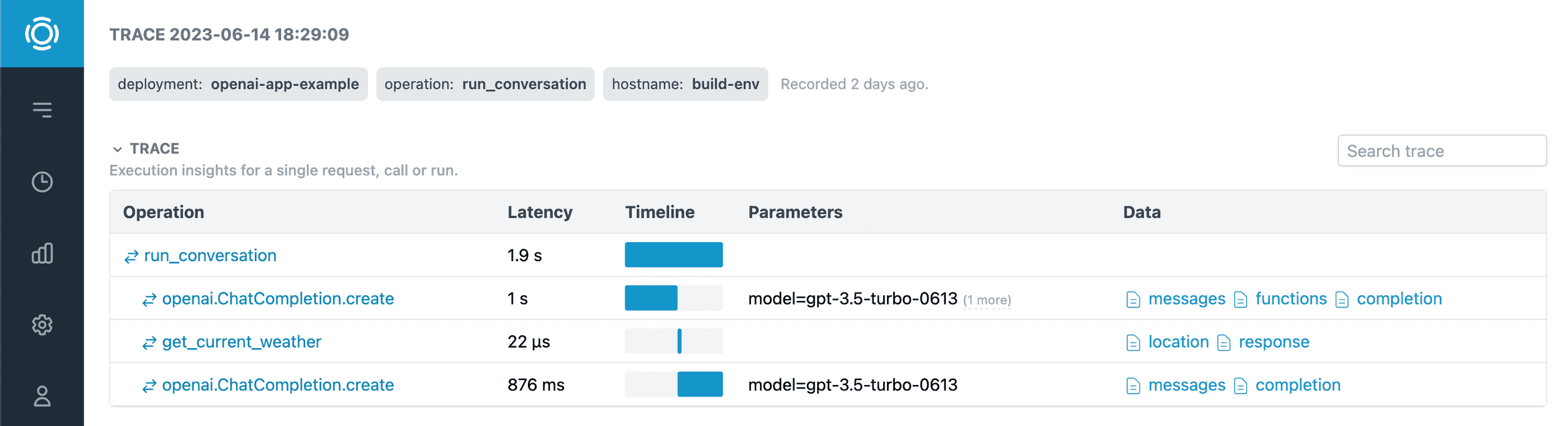

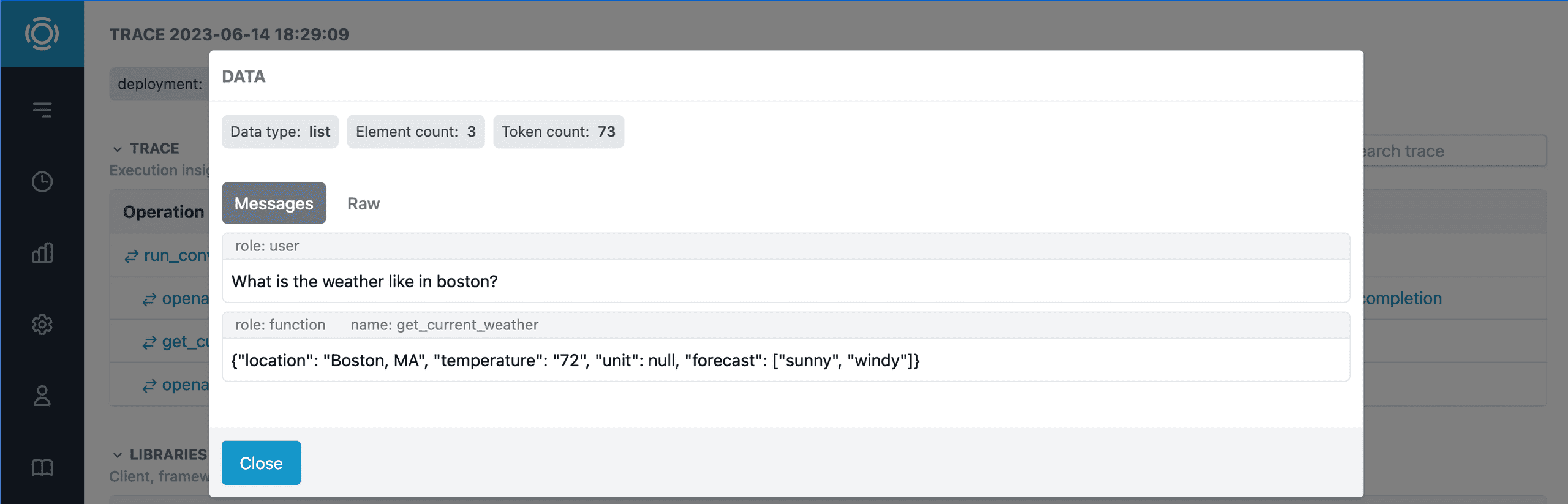

After the application is started, traces and metrics are automatically recorded and available on the dashboards. Here is an example of a trace.

Graphsignal also automatically tracks OpenAI API costs. Learn more about OpenAI cost tracking here.

Give it a try and let us know what you think. Follow us at @GraphsignalAI for updates.