Monitor OpenAI API Latency, Tokens, Rate Limits, and More

By Dmitri Melikyan | 2 min read

Learn how to monitor and troubleshoot OpenAI API based applications in production using Graphsignal.

OpenAI APIs in Production Applications

The OpenAI API is very easy to use; however, there are a few challenges when it comes to production applications, chiefly latency, rate limits, and costs. API usage should be designed with these in mind: properly managing rate limits, retrying failed requests, making sure completions fit within the max_tokens limit, and so on. OpenAI's own guides explain many of the solutions.
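As an illustration of the retry point above, here is a minimal sketch of exponential backoff with jitter. It is not taken from OpenAI's guides or from Graphsignal: `call_with_backoff`, its parameters, and the stand-in `RateLimitError` class are hypothetical names chosen for this example. In a real application you would catch the rate limit exception raised by your API client instead.

```python
import random
import time


class RateLimitError(Exception):
    """Stand-in for the rate limit exception raised by an API client (assumption)."""


def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(), retrying on RateLimitError with exponential backoff and jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries, surface the error to the caller
            # Double the delay on every attempt, cap it, then add up to 10% jitter
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
```

The `sleep` argument is injectable only so the helper can be exercised without waiting; in production the default `time.sleep` is used. The jitter keeps many clients from retrying at exactly the same moment.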

Additionally, to keep API usage reliable and scalable in a constantly evolving production environment, you need visibility into API performance, including data statistics. A good start is to log some requests or report metrics.
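A do-it-yourself starting point for the logging approach mentioned above could look like the sketch below. It only uses the Python standard library; `timed_call`, the logger name, and the `stats` list are hypothetical names for this example and are not part of any OpenAI or Graphsignal API.

```python
import logging
import time
from contextlib import contextmanager

logger = logging.getLogger("openai_calls")


@contextmanager
def timed_call(name, stats):
    """Record latency and error type for the wrapped block, then log them."""
    start = time.perf_counter()
    error = None
    try:
        yield
    except Exception as exc:
        error = type(exc).__name__  # keep the exception class name for later analysis
        raise
    finally:
        latency_ms = (time.perf_counter() - start) * 1000
        stats.append({"name": name, "latency_ms": latency_ms, "error": error})
        logger.info("call=%s latency_ms=%.1f error=%s", name, latency_ms, error)
```

An API request would be wrapped as `with timed_call("completion", stats): ...`. This covers latency and errors only; token counts, costs, and data statistics would need additional bookkeeping.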

Graphsignal offers a simpler and, at the same time, more specialized way to achieve visibility and observability for AI applications that use hosted inference APIs or serve models.

Monitoring OpenAI API Calls With Graphsignal

Graphsignal can automatically instrument OpenAI API calls and start tracing and monitoring them. You only need to set up the Graphsignal tracer by providing a Graphsignal API key and a deployment name. Sign up for a free account to get an API key.

import graphsignal

# Provide an API key directly or via GRAPHSIGNAL_API_KEY environment variable

graphsignal.configure(api_key='my-api-key', deployment='my-openai-app-prod')

See the Quick Start for complete setup instructions.

NOTE: When streaming is used for completion or chat requests, the Graphsignal tracer does not count prompt tokens by default, so such requests are undercounted in cost metrics. To enable token counting for streaming, simply pip install tiktoken, and the tracer will use it for counting. We also recommend importing tiktoken somewhere in your code; otherwise, the first API request will have increased latency because the tiktoken package is imported for the first time.
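One way to follow the import recommendation above is to import tiktoken eagerly at application startup. The guarded form below is only a sketch: the `TIKTOKEN_AVAILABLE` flag is a hypothetical name, and the `try`/`except` is there so the application still starts when the package is not installed.

```python
# Import tiktoken at startup so the first API request does not pay the import cost.
try:
    import tiktoken  # noqa: F401  (imported for its side effect of being loaded early)
    TIKTOKEN_AVAILABLE = True
except ImportError:
    # Without tiktoken installed, streaming requests are undercounted as described above.
    TIKTOKEN_AVAILABLE = False
```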

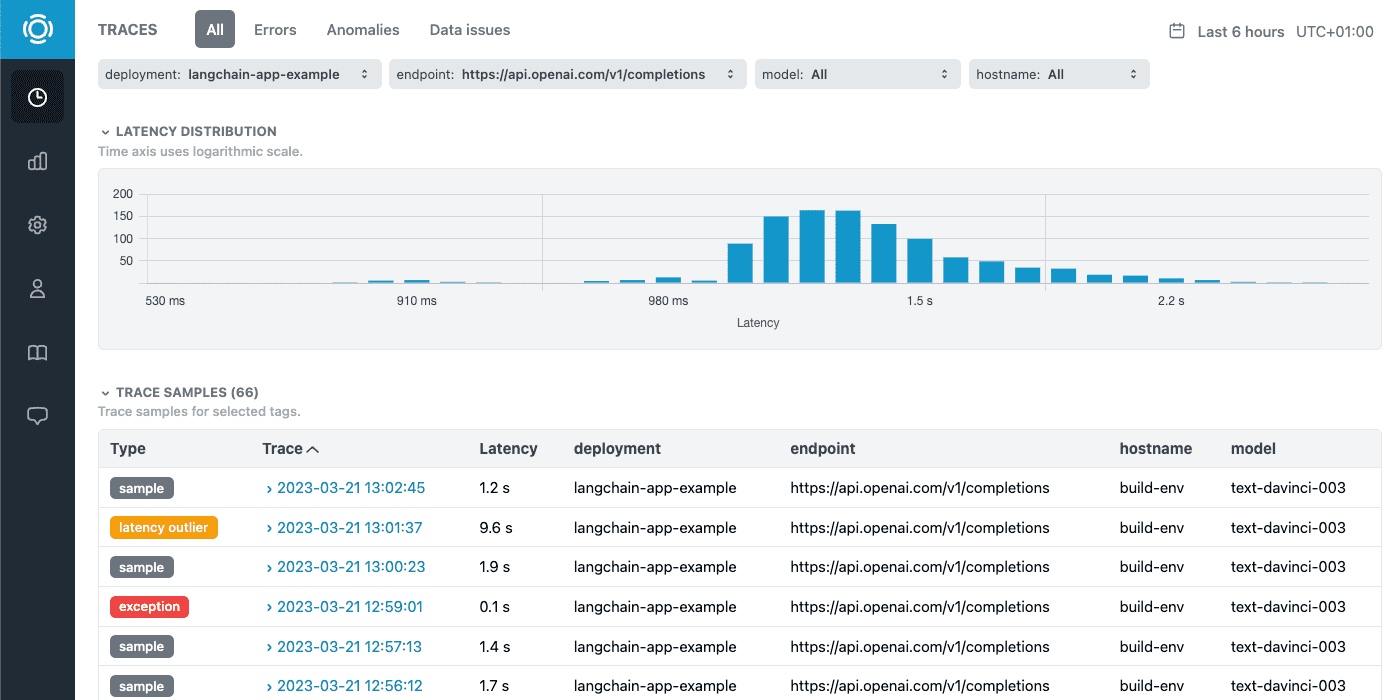

To demonstrate, I ran this example app, which makes OpenAI completion requests continuously. While it runs, sample traces and metrics are continuously recorded and available in the dashboard for analysis. Exceptions, such as RateLimitError, and latency outliers are also recorded automatically.

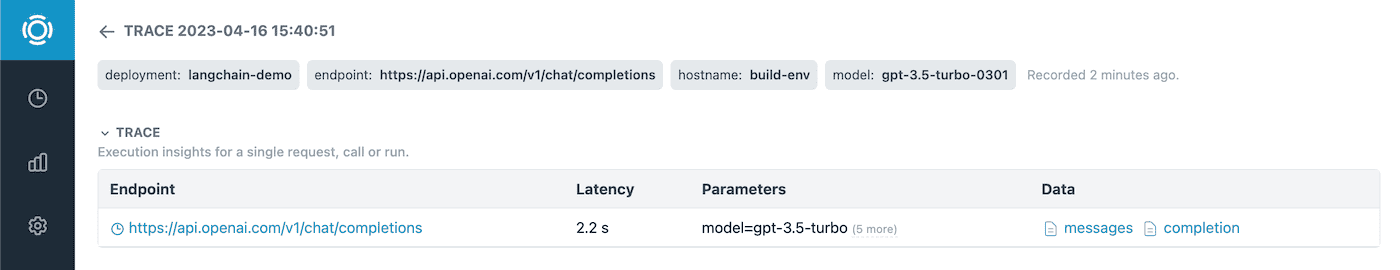

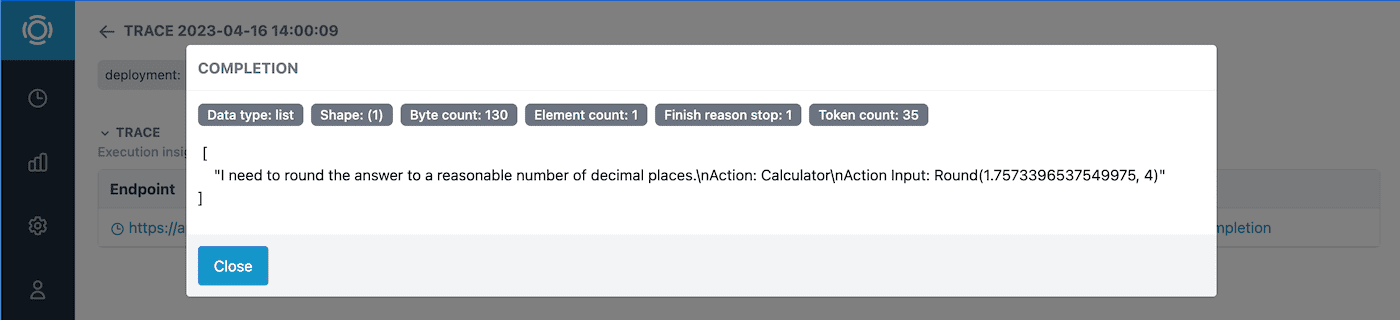

We can look into a particular trace sample to answer questions about high latency or rate limits, and to see the data statistics of that call and whether they may have been the cause. We may also want to check the token count and whether the completion's finish reason was length instead of stop.
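The same finish reason check can also be done in application code. The sketch below assumes the response has been converted to a plain dictionary with the `choices[].finish_reason` and `usage.total_tokens` fields that the OpenAI API returns; the helper names and the sample values are hypothetical and made up for illustration.

```python
def truncated_choices(response):
    """Return the indexes of choices whose generation stopped at the token limit."""
    return [
        choice.get("index", i)
        for i, choice in enumerate(response.get("choices", []))
        if choice.get("finish_reason") == "length"
    ]


def total_tokens(response):
    """Return the total token count reported for the call, or None if absent."""
    usage = response.get("usage") or {}
    return usage.get("total_tokens")


# Illustrative response shape with made-up values
sample = {
    "choices": [
        {"index": 0, "text": "A complete answer.", "finish_reason": "stop"},
        {"index": 1, "text": "An answer that was cut", "finish_reason": "length"},
    ],
    "usage": {"prompt_tokens": 12, "completion_tokens": 28, "total_tokens": 40},
}
```

A non-empty result from `truncated_choices(sample)` suggests the completion did not fit within max_tokens, so the limit or the prompt may need adjusting.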

Optionally, prompts and completions can be recorded along with the data statistics. This is instrumental for troubleshooting errors and data issues. To enable it, set record_data_samples to True in the graphsignal.configure() call.

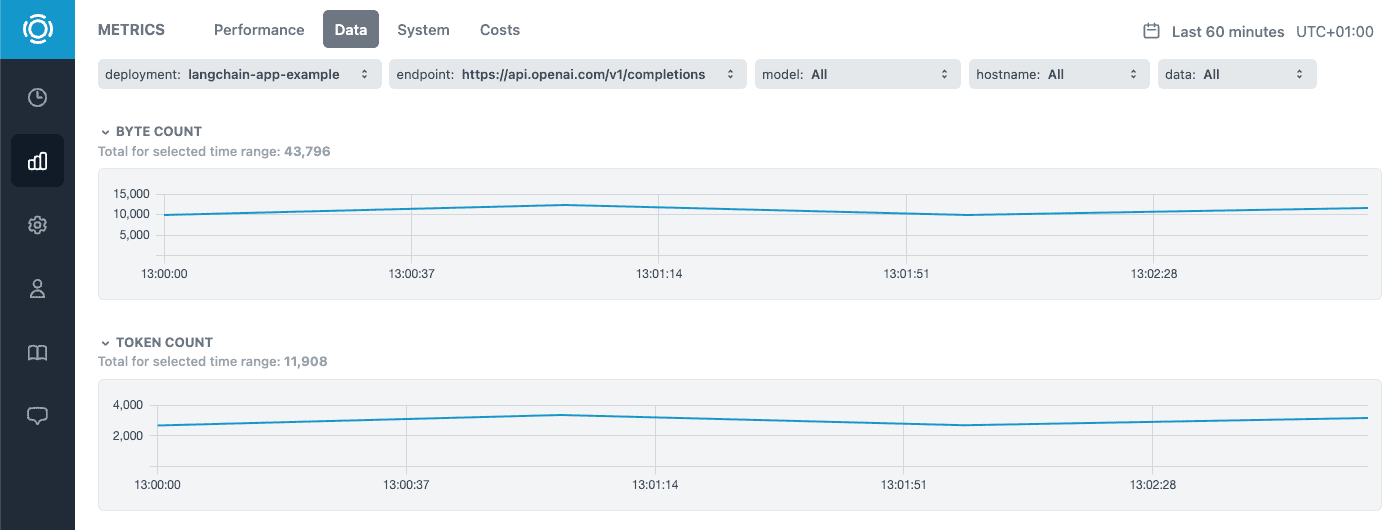

Additionally, performance metrics, data metrics, and resource utilization are available to monitor applications over time and correlate any changes or issues.

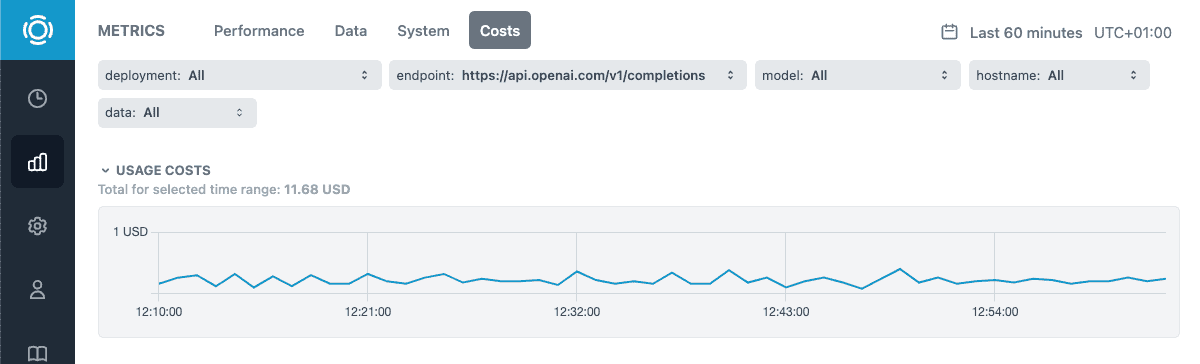

To analyze OpenAI API usage costs by deployment, model, and any custom tags, the Costs tab includes a usage cost chart for the selected filters and time range, along with a total for that time range.

Give it a try and let us know what you think. Follow us at @GraphsignalAI for updates.

Graphsignal also allows you to easily trace and monitor any function or code segment. See the Quick Start guide for instructions.