Measuring LLM Token Streaming Performance

By Dmitri Melikyan

Learn how to measure and analyze LLM streaming performance using time-to-first-token metrics and traces.

What is LLM Token Streaming

LLM token streaming is a feature of the OpenAI API and other model APIs that returns language output incrementally, in real time. Instead of waiting for a complete message or block of text, a client receives individual tokens, the fundamental units of language processing, from the API as they are generated. Because tokens are processed and returned incrementally, an application can display or act on partial output right away, which makes conversations with a model such as ChatGPT feel faster and more interactive. This responsiveness makes streaming well suited to applications that require low-latency, dynamic language-based interactions.

Latency vs. Time-To-First-Token

Latency is the overall delay between initiating a request and receiving the complete response. It encompasses every processing stage, including data transmission, computation, and any additional waiting time. Time-to-first-token (TTFT), by contrast, measures how long it takes a language model to generate and return the first token of its response after receiving user input. While latency gives a broader view of the entire response time, TTFT focuses on the critical first step in delivering real-time, interactive feedback from the model.
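
To make the distinction concrete, here is a minimal sketch that records both values while consuming a stream. It assumes the streamed response can be treated as a plain Python iterator of text chunks; `fake_token_stream` and `measure_stream` are hypothetical names used only for illustration, and the simulated delays stand in for a real model API.

```python
import time
from typing import Iterable, Iterator, List, Optional, Tuple


def fake_token_stream(
    tokens: List[str], first_delay: float = 0.05, per_token_delay: float = 0.01
) -> Iterator[str]:
    """Hypothetical stand-in for a streaming LLM response (assumption, not a real API)."""
    time.sleep(first_delay)  # simulated work before the first token is ready
    for i, token in enumerate(tokens):
        if i > 0:
            time.sleep(per_token_delay)  # simulated gap between later tokens
        yield token


def measure_stream(stream: Iterable[str]) -> Tuple[str, Optional[float], float]:
    """Consume a stream and return (full_text, ttft_seconds, latency_seconds)."""
    start = time.perf_counter()
    ttft: Optional[float] = None
    chunks: List[str] = []
    for token in stream:
        if ttft is None:
            # The first token has arrived: this elapsed time is the TTFT.
            ttft = time.perf_counter() - start
        chunks.append(token)
    # Latency covers the whole response, from request start to the last token.
    latency = time.perf_counter() - start
    return "".join(chunks), ttft, latency


if __name__ == "__main__":
    text, ttft, latency = measure_stream(fake_token_stream(["Hel", "lo", "!"]))
    print(f"text={text!r} ttft={ttft:.3f}s latency={latency:.3f}s")
```

In a real application the timer would start immediately before the request is sent, so that network and queuing time are included in both numbers. An empty stream leaves TTFT as `None`, since no first token ever arrives.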

Measuring and Analyzing Time-To-First-Token With Graphsignal

Measuring TTFT is crucial for optimization because it reveals the time spent on essential processes before the initial token is generated. This includes model initialization, request processing, an occasional cold boot, tokenization, attention mechanisms, and other computational steps. In the context of language models, a cold boot is the scenario where the model is starting up or being initialized from a state of inactivity.

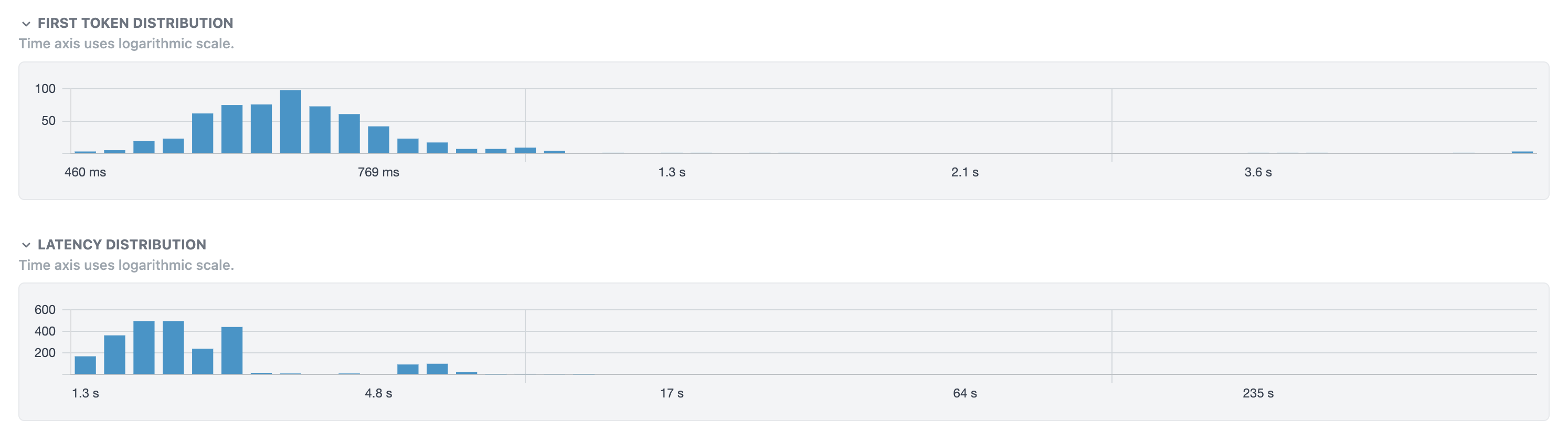

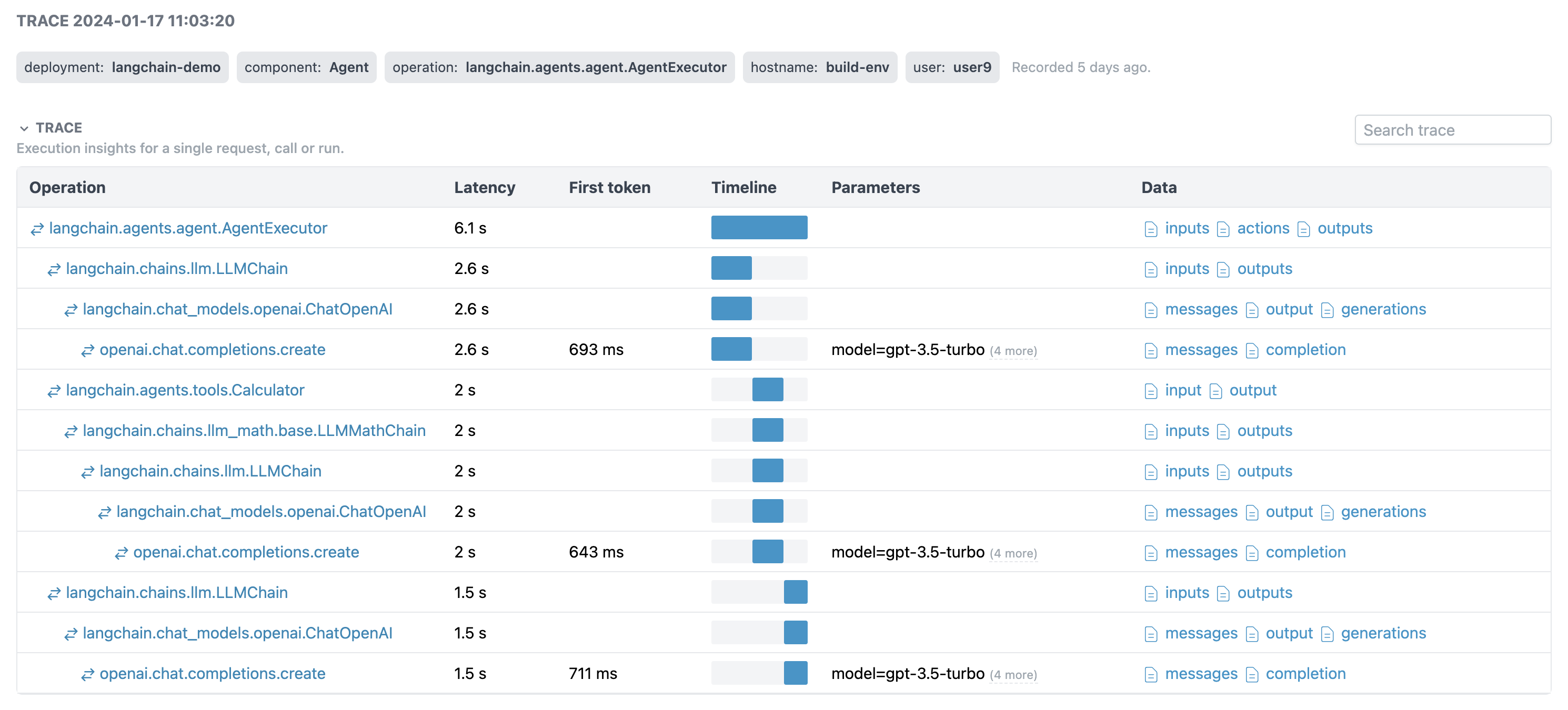

In addition to latency metrics and breakdowns by operation, including LLM calls, Graphsignal provides TTFT percentiles and distributions, as well as trace information. To visualize and monitor this data, simply add Graphsignal to your application.
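
For intuition about what TTFT percentiles summarize, the sketch below computes p50, p95, and p99 from a list of TTFT samples using only the Python standard library. It is an illustration under stated assumptions, not Graphsignal's implementation: `ttft_percentiles` is a hypothetical helper, the samples are assumed to be in milliseconds, and the "inclusive" interpolation method is one of several reasonable choices.

```python
import statistics
from typing import Dict, Sequence


def ttft_percentiles(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Return p50/p95/p99 for TTFT samples in milliseconds (hypothetical helper)."""
    if len(samples_ms) < 2:
        raise ValueError("at least two TTFT samples are required")
    # n=100 yields 99 cut points; cut point k (1-based) is the k-th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


if __name__ == "__main__":
    # Made-up sample values purely for demonstration.
    samples = [120.0, 135.0, 128.0, 410.0, 131.0, 125.0, 980.0, 129.0]
    for name, value in ttft_percentiles(samples).items():
        print(f"{name}: {value:.1f} ms")
```

High percentiles such as p95 and p99 are worth watching alongside the median because occasional slow starts, for example after a cold boot, barely move the median but show up clearly in the tail.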

After starting the application, whether locally or in production, the TTFT will be available for analysis alongside various other metrics and trace information.

Give it a try and let us know what you think. Follow us at @GraphsignalAI for updates.