AI Application Monitoring and Profiling
By Dmitri Melikyan

Learn about the challenges of running AI applications and how to address them with a new generation of tools.

AI Applications vs. Traditional Applications

AI applications, whether simple model serving or inference jobs, differ from typical web and desktop applications in that they are built around, or include, machine learning models. This sounds trivial, but it has a substantial effect on how these applications are deployed, monitored and debugged.

- Machine learning frameworks are used to run model inference. A model may run with PyTorch or TensorFlow directly, or it can be exported or compiled to other formats, such as TorchScript or ONNX, for improved performance and reduced size. Current monitoring and profiling tools are unaware of these frameworks and runtimes, and therefore do not provide the visibility needed to optimize models or detect issues.

- Related to the previous point, some model inference applications rely on GPUs or other accelerators to run large models. Again, current monitoring tools do not provide visibility into this hardware, since it has not previously been used in server applications to such an extent.

- Models are data-driven; the model output depends entirely on the input data. This leads to a new problem: issues with model input may cause wrong output without throwing exceptions. Such silent failures may stay undetected for weeks. Non-ML applications can fail silently too, but to a much lesser extent.

AI Application Observability

Observability is a very familiar concept for DevOps and SRE teams. It is usually defined by its pillars: logs, metrics and traces. It would be fair to add profiles to the pillars, since they have proved instrumental and are now offered by many APM tools. I was fortunate to build one of the first production profilers, StackImpact, and experienced firsthand how evolving technology stacks require new tools to ensure performance and reliability.

Following the same logic, to cover AI applications and address the challenges presented above, the observability scope should be extended.

- Monitoring and profiling tools need to support ML frameworks, runtimes and hardware; without such support, visibility is limited. The same applies to integrations with specialized model servers, such as TorchServe, Seldon and others.

- Model input and output data should be monitored for issues. Per-inference data profiles provide valuable context for troubleshooting model failures. Whether a model fails silently or raises an exception, it is instrumental to have data profiles or statistics available.

- Model performance metrics can also help reduce MTTR. However, model accuracy is rarely available in real time because ground truth or feedback arrives with a delay. I discuss this in more detail in the next section.
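To make the idea of per-inference data profiles more concrete, here is a minimal sketch in plain Python. It assumes model inputs and outputs can be flattened to lists of floats; `DataProfile`, `profile_values`, `profiled_inference` and `toy_model` are hypothetical names for illustration, not the API of Graphsignal or any other tool. The toy model reproduces a silent failure: a missing input value propagates to the output without raising an exception, but the recorded profiles reveal it.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple


@dataclass
class DataProfile:
    """Summary statistics for one batch of model input or output values."""
    count: int
    missing: int                # entries that are None or NaN
    minimum: Optional[float]
    maximum: Optional[float]
    mean: Optional[float]


def profile_values(values: Sequence[Optional[float]]) -> DataProfile:
    # Keep only usable numbers; treat None and NaN as missing.
    valid = [v for v in values if v is not None and not math.isnan(v)]
    missing = len(values) - len(valid)
    if not valid:
        return DataProfile(len(values), missing, None, None, None)
    return DataProfile(len(values), missing, min(valid), max(valid),
                       sum(valid) / len(valid))


def profiled_inference(model: Callable[[Sequence[float]], List[float]],
                       inputs: Sequence[float],
                       log: List[Tuple[str, DataProfile]]) -> List[float]:
    # Hypothetical wrapper: record input and output profiles around one call.
    log.append(("input", profile_values(inputs)))
    outputs = model(inputs)
    log.append(("output", profile_values(outputs)))
    return outputs


def toy_model(xs: Sequence[float]) -> List[float]:
    # Stand-in for a real model: NaN in, NaN out, and no exception raised.
    return [2.0 * x for x in xs]


log: List[Tuple[str, DataProfile]] = []
profiled_inference(toy_model, [1.0, float("nan"), 3.0], log)
for kind, profile in log:
    print(kind, profile)
```

A real implementation would also need to handle tensors, categorical features and sampling to keep overhead low; the point here is only that a profile recorded alongside each inference gives useful context when a model misbehaves.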

There are other aspects not covered here that may require a special approach to monitoring, for example inference on edge devices.

Application Monitoring vs. Model Monitoring

Model performance, e.g. accuracy, is another aspect to consider in the context of application observability. It is useful for application monitoring only if model accuracy becomes available soon enough to detect and troubleshoot real-time issues. In practice, this is rarely the case.

Therefore, I consider model monitoring a part of model development and/or business analytics, rather than of AI application and infrastructure monitoring. Additionally, model monitoring implementations are use-case-specific by nature.

The same logic can be applied to data drift and concept drift monitoring. If the drift is sudden and indicates a technical problem, detecting it will help reduce MTTR and thus improve reliability. Otherwise, e.g. for seasonal drift, it should be considered part of model development, in the scope of adjusting the model to the real world. Interestingly, as the authors of an interview study note: "Surprisingly, participants didn’t seem too worried about slower, expected natural data drift over time — they noted that frequent model retrains solved this problem".
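One simple way to separate a sudden shift from slow drift is to compare a short recent window of a monitored value (a feature or a model output) against the window immediately before it. The sketch below does this with a z-score on the mean. The function name `sudden_shift` and the default threshold of 4.0 are illustrative assumptions rather than a recommended setting, and production drift detectors may use richer tests, for example on whole distributions rather than means.

```python
import math
import statistics
from typing import Sequence


def sudden_shift(baseline: Sequence[float], recent: Sequence[float],
                 z_threshold: float = 4.0) -> bool:
    """Flag an abrupt change in the mean of a monitored value.

    `baseline` should be the window immediately preceding `recent`. A slow,
    gradual drift tends to move both windows together and is unlikely to
    trip this check, while an abrupt jump between windows will.
    """
    if len(baseline) < 2 or not recent:
        raise ValueError("need at least 2 baseline values and 1 recent value")
    mu = statistics.fmean(baseline)
    sigma = statistics.stdev(baseline)
    recent_mean = statistics.fmean(recent)
    if sigma == 0:
        # Constant baseline: any change in the mean counts as a shift.
        return recent_mean != mu
    # z-score of the recent mean, using the standard error of the mean.
    stderr = sigma / math.sqrt(len(recent))
    z = (recent_mean - mu) / stderr
    return abs(z) > z_threshold


baseline = [10.0, 10.2, 9.8, 10.1, 9.9] * 20        # previous window
print(sudden_shift(baseline, [10.05, 9.95] * 5))   # False: stable values
print(sudden_shift(baseline, [12.0] * 10))         # True: abrupt jump
```

A check like this fits the reliability side of the argument: it fires on abrupt changes that likely point to a technical problem, and leaves slow, expected drift to model development and retraining.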

The New Generation of Monitoring Tools

While traditional tools like Prometheus and Grafana are being used to monitor AI applications, they do not cover the ML-specific aspects discussed above and therefore provide limited visibility for troubleshooting and optimizing model inference.

As far as model performance is concerned, a number of startups are working on model monitoring solutions to help ML practitioners improve and troubleshoot models on real-world data.

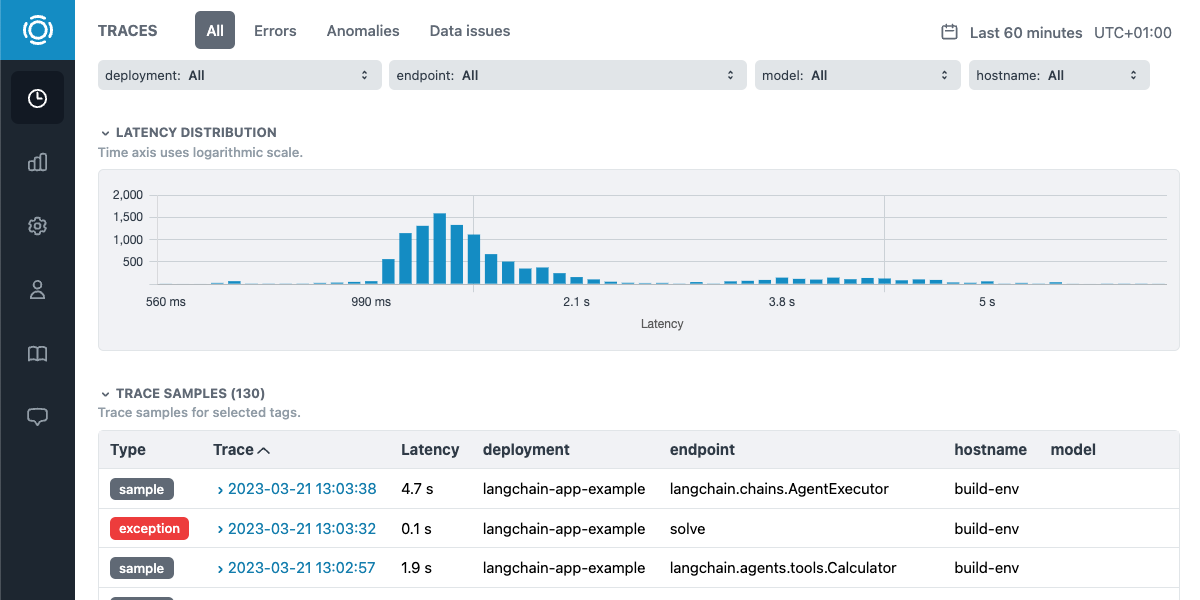

In turn, we at Graphsignal are building tools to address observability challenges of AI applications and help engineers and SREs manage their AI stacks with confidence.